Following my presentation in 2015 at the Moscow State Linguistic University Centre for Socio-Cognitive Discourse Studies (SCoDis) (Laboratory PoliMod), I am organizing a workshop this year on real-time gesture capture and its annotation with linguists.

MeMuMo: Methods in Multimodal Communication Research – New recording methods for motion capture – Kinect

Leaders: Jean-François Jégo & Dominique Boutet. Languages: English (French available)

« No, Cassius. The eye can’t see itself, except by reflection in other surfaces. » Jul. Caes., Act 1, Scene 2, W. Shakespeare. Video is still the classic way to capture movements and to analyze gestures as a series of still images. However, extracting data from video is difficult, especially since the point of view is fixed and the analysis relies mainly on visual observation of the movement. Motion Capture (Mocap) has the advantage of recording three-dimensional data, so the point of view can be changed after the recording. It also makes it possible to extract more abstract movement descriptors, such as the speed or acceleration of the different body parts.
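As an illustration of how such descriptors can be derived, here is a minimal sketch that computes the speed and acceleration of one joint (for instance a hand) from its successive 3D positions. It assumes positions in metres sampled at a constant frame rate (30 frames per second is typical of the Kinect, but it is an assumption here) and uses central finite differences. The function names are hypothetical and are not part of any Kinect SDK.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) position of one joint, in metres


def speeds(positions: List[Point], fps: float) -> List[float]:
    """Speed (m/s) at each interior frame, using central differences.

    The first and last frames are dropped because they lack a neighbour.
    """
    dt = 1.0 / fps
    out = []
    for i in range(1, len(positions) - 1):
        prev, nxt = positions[i - 1], positions[i + 1]
        velocity = [(b - a) / (2 * dt) for a, b in zip(prev, nxt)]
        out.append(math.sqrt(sum(c * c for c in velocity)))
    return out


def accelerations(positions: List[Point], fps: float) -> List[float]:
    """Acceleration magnitude (m/s^2) at each interior frame.

    Uses the second central difference (p[i+1] - 2 p[i] + p[i-1]) / dt^2.
    """
    dt = 1.0 / fps
    out = []
    for i in range(1, len(positions) - 1):
        prev, cur, nxt = positions[i - 1], positions[i], positions[i + 1]
        acc = [(n - 2 * c + p) / (dt * dt) for p, c, n in zip(prev, cur, nxt)]
        out.append(math.sqrt(sum(a * a for a in acc)))
    return out


if __name__ == "__main__":
    # Hypothetical hand trajectory: constant motion along x at 1 m/s, 30 fps.
    hand = [(i / 30, 0.0, 0.0) for i in range(5)]
    print(speeds(hand, 30.0))         # values close to 1.0
    print(accelerations(hand, 30.0))  # values close to 0.0
```

Real Mocap data from low-cost sensors is noisy, and differentiation amplifies noise, so in practice the positions would usually be smoothed before computing these descriptors.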

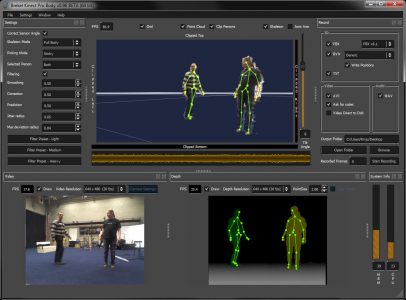

In this workshop, we propose to investigate and practice new recording methods for Motion Capture, focusing on markerless, low-cost devices (such as the Microsoft Kinect camera). We will try out a “new” gesture analysis pipeline, starting with Mocap recordings of short conversational situations acted out by the workshop participants. We will then conduct a simple gesture analysis by importing the Mocap data into the ELAN software. Finally, we will ask ourselves what Mocap changes, offers and allows (or not), moving away somewhat from the visual modality as the only way to understand gestures.
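One step of such a pipeline is turning per-frame Mocap descriptors into a file that an annotation tool can display next to the video. The sketch below writes a tab-delimited time series (a time column in seconds followed by one column per descriptor). ELAN can link time-series data to a recording, but the exact layout it accepts is set in its own import configuration, so this column layout is an assumption to check against the ELAN manual. The function `write_timeseries` and the track names are hypothetical.

```python
import csv
import io
from typing import Dict, List, TextIO


def write_timeseries(out: TextIO, fps: float, tracks: Dict[str, List[float]],
                     start_frame: int = 0) -> None:
    """Write per-frame values as tab-delimited rows: time (s), then one column per track.

    Assumes at least one track. start_frame offsets the timestamps, e.g. 1 when
    the values come from central differences, which drop the first frame.
    """
    names = list(tracks)
    n_rows = min(len(values) for values in tracks.values())  # truncate to the shortest track
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    writer.writerow(["time"] + names)
    for i in range(n_rows):
        t = (start_frame + i) / fps
        writer.writerow([f"{t:.3f}"] + [f"{tracks[name][i]:.4f}" for name in names])


if __name__ == "__main__":
    # Hypothetical right-hand speed values (m/s) computed from frames 1..3 at 30 fps.
    buf = io.StringIO()
    write_timeseries(buf, 30.0, {"right_hand_speed": [0.8, 1.1, 0.9]}, start_frame=1)
    print(buf.getvalue())
```

To produce a file on disk, the same function can be called with `open("right_hand.tsv", "w", newline="")` as the stream; timestamps are expressed relative to the first Mocap frame, so they still need to be aligned with the video's time origin.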